I’ve been watching the developments with Matthew Jockers’s Syuzhet package and blog posts with interest over the last few months. I’m always excited to try new tools that I can bring into both the classroom and my own research. For those of you who are just now hearing about it, Syuzhet is a package for extracting and plotting the “emotional trajectory” of a novel.

The Syuzhet algorithm works as follows: First, you split the novel into sentences. Then, you use sentiment analysis to assign each sentence a number indicating how positive or negative it is. For example, “I’m happy” and “I like this” would have positive numbers, while “This is terrible” and “Everything is awful” would get negative numbers. Finally, you smooth out these numbers to get what Jockers calls the “foundation shape” of the novel, a smooth graph of how emotion rises and falls over the course of the novel’s plot.

This is an interesting idea, and I installed the package to try it out, but I’ve encountered several substantial problems along the way that challenge Jockers’s conclusion that he has discovered “six, or possibly seven, archetypal plot shapes” common to novels. I communicated privately with him about some of these issues last month, and I hope these problems will be addressed in the next version of the package. Until then, users should be aware that the package does not work as advertised.

I’ll proceed step-by-step through the process of using the package, explaining the problems at each step.

1. Splitting Sentences

The first step of the algorithm is to split the text into sentences using Syuzhet’s “get_sentences” function. I tried running this on Charles Dickens’s Bleak House, and immediately ran into trouble: in many places, especially around dialogue, Syuzhet incorrectly interpreted multiple sentences as being just one sentence. This seemed to be particularly common around quotation marks. For example, here’s one “sentence” from the middle of Chapter III, according to Syuzhet:[1]

Mrs. Rachael, I needn’t inform you who were acquainted with the late Miss Barbary’s affairs, that her means die with her and that this young lady, now her aunt is dead–”

“My aunt, sir!”

“It is really of no use carrying on a deception when no object is to be gained by it,” said Mr. Kenge smoothly, “Aunt in fact, though not in law.

As you can imagine, these grouping errors are likely to cause problems for works with extensive dialogue (such as most novels and short stories).[2]
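To illustrate one plausible way such grouping errors arise, here is a toy sketch. Syuzhet relies on openNLP (per Jockers’s reply in the comments), a trained model rather than a regular expression, so this is not its actual logic; it only shows how a rule expecting whitespace immediately after terminal punctuation misses every boundary hidden behind a closing quotation mark. The patterns and the shortened, adapted excerpt are my own assumptions.

```python
import re

# Naive rule: a sentence ends at ., ! or ? immediately followed by whitespace.
NAIVE = re.compile(r"(?<=[.!?])\s+")

# Quote-aware rule: also accept a closing quotation mark between the
# punctuation and the whitespace (each lookbehind is fixed-width).
QUOTE_AWARE = re.compile(r'(?<=[.!?])\s+|(?<=[.!?]["”’])\s+')

# Shortened and adapted from the Bleak House excerpt above.
text = ("“My aunt, sir!” “It is really of no use carrying on a deception.” "
        "“Aunt in fact, though not in law.”")

naive = NAIVE.split(text)        # every boundary sits behind a ”, so no split
aware = QUOTE_AWARE.split(text)  # three separate sentences

for sentence in aware:
    print(sentence)
```

A rule this simple would still split after abbreviations such as “Mr.”, which is why the sample text leaves out that part of the excerpt.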

2. Assigning Value to Words

The second step is to compute the emotional valence of each sentence, a problem known as sentiment analysis. The Syuzhet package provides four options for sentiment analysis: “Bing”, “AFINN”, “NRC”, and “Stanford”; “Bing” is the default, and is what Jockers recommends in his documentation.

“Bing,” “AFINN,” and “NRC” are all simple lexicons: each is a list of words with a precomputed positive or negative “score” for each word, and Syuzhet computes the valence of a sentence by simply adding together the scores of every word in it.
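A minimal sketch of this kind of lexicon lookup, assuming a tiny hypothetical lexicon with Bing-style scores of -1 and +1 (the entries are illustrative, not copied from any real lexicon). This version adds a score every time a word appears; as noted in the list below, Syuzhet’s own implementation counts a repeated word only once per sentence.

```python
import re

# Hypothetical toy lexicon; real lexicons contain thousands of entries.
TOY_LEXICON = {"happy": 1, "like": 1, "terrible": -1, "awful": -1}

def sentence_valence(sentence, lexicon=TOY_LEXICON):
    """Sum the scores of the words in `sentence`; unknown words count as 0."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return sum(lexicon.get(word, 0) for word in words)

examples = ["I'm happy", "I like this", "This is terrible", "Everything is awful"]
scores = {s: sentence_valence(s) for s in examples}
print(scores)
```

Each word is looked up on its own, with no view of its neighbours, which is the root of the drawbacks listed next.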

This approach has a number of drawbacks:

- Since each word is scored in isolation, it can’t process modifiers. This means firstly that intensifiers have no effect, so that adding “very” or “extremely” won’t change the valence, and secondly (and more worryingly) that negations have no effect. Consequently, the sentence “I am not happy today” has exactly the same positive valence as “I am extremely happy today” or just “I’m happy.”

- For the same reason, the algorithm can’t take the multiple meanings of words into consideration, so words such as “well” and “like” are often marked as positive, even when they’re used in neutral ways. The “Bing” lexicon, for example, considers the sentence “I am happy” to be less positive than the sentence “Well, it’s like a potato.”[3]

- All three lexicons primarily contain contemporary English words, because they were developed for analyzing modern documents like product reviews and tweets. As a result, dialect words may receive neutral scores regardless of their actual emotional valence, and words whose meanings have changed since the Victorian period may have scores that do not at all reflect their use in the text. For example, “noisome,” “odours,” “execrations,” and “sulphurous” are negative words in Portrait of the Artist but are not negative in Bing’s lexicon.

- Syuzhet’s particular implementation of this approach only counts a word once for a given sentence even if it’s repeated, so that e.g. “I am happy–so happy–today” has the same valence as “I am happy today.”

- These lexicons also do not provide much nuance: Bing and NRC assign every word a value of -1 (negative terms), 0 (neutral terms), or 1 (positive terms). Thus, the two sentences “This is decent” and “This is wonderful!” both have valence 1, even though the second is clearly much more positive.
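Several of these drawbacks can be reproduced with a toy scorer that, like the implementation described in the last two points, looks up each distinct word once and uses only the values -1, 0, and +1. The three-entry lexicon is hypothetical, not the Bing list, so this is a sketch of the behavior rather than Syuzhet’s code.

```python
import re

# Hypothetical toy lexicon; every entry is -1, 0 or +1, as in Bing and NRC.
TOY_LEXICON = {"happy": 1, "decent": 1, "wonderful": 1}

def valence_once_per_word(sentence, lexicon=TOY_LEXICON):
    """Score each distinct word once, ignoring context and repetition."""
    words = set(re.findall(r"[a-z']+", sentence.lower()))
    return sum(lexicon.get(word, 0) for word in words)

sentences = [
    "I am not happy today",          # negation ignored
    "I am extremely happy today",    # intensifier ignored
    "I'm happy.",
    "I am happy--so happy--today",   # repeated word counted once
    "This is decent",                # no gradation between
    "This is wonderful!",            # "decent" and "wonderful"
]
scores = [valence_once_per_word(s) for s in sentences]
print(scores)
```

All six sentences receive the same score of 1.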

To demonstrate some of these problems, I composed the following simple paragraph:

I haven’t been sad in a long time.

I am extremely happy today.

It’s a good day.

But suddenly I’m only a little bit happy.

Then I’m not happy at all.

In fact, I am now the least happy person on the planet.

There is no happiness left in me.

Wait, it’s returned!

I don’t feel so bad after all!

According to common sense, we’d expect the sentiment assigned to these sentences to start off fairly high, then decline rapidly from sentences 4 to 7, and finally return to neutral (or slightly positive) at the end.

Using the Syuzhet package, we get the following sentiment trajectory:

The emotional trajectory does pretty much exactly the opposite of what we expected. It starts negative, because the only word in “I haven’t been sad in a long time” with a recognized value is “sad.” Then it rises and stays at the same level of positivity for the next several sentences, because “I am extremely happy today” and “There is no happiness left in me” are scored as equally positive. At the end, as the narrative turns hopeful again, Syuzhet’s trajectory drops back to negative because it detected the word “bad” in the final sentence.[4]
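A toy version of this experiment shows how the shape arises. It assumes a five-word lexicon (happy, happiness, good = +1; sad, bad = -1) in place of Bing’s much larger list, so its numbers are illustrative and will not match Syuzhet’s output sentence for sentence.

```python
import re

# Hypothetical five-word lexicon standing in for Bing.
TOY_LEXICON = {"happy": 1, "happiness": 1, "good": 1, "sad": -1, "bad": -1}

def sentence_valence(sentence, lexicon=TOY_LEXICON):
    """Sum word scores, looking each word up in isolation."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return sum(lexicon.get(word, 0) for word in words)

paragraph = [
    "I haven't been sad in a long time.",
    "I am extremely happy today.",
    "It's a good day.",
    "But suddenly I'm only a little bit happy.",
    "Then I'm not happy at all.",
    "In fact, I am now the least happy person on the planet.",
    "There is no happiness left in me.",
    "Wait, it's returned!",
    "I don't feel so bad after all!",
]
trajectory = [sentence_valence(s) for s in paragraph]
print(trajectory)
```

The toy trajectory starts negative, sits on a flat positive plateau through exactly the sentences that should decline, and ends negative: the same pattern described above.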

This example showcases a number of the weaknesses of this sentiment analysis strategy on very straightforward text; I expect that these problems will be far worse for novels that convey emotion through metaphors or imagery patterns, or that use satire and sarcasm (e.g. most works by Jane Austen, Jonathan Swift, Mark Twain, or Oscar Wilde), irony, or an unreliable narrator (e.g. much of postmodern literature).

Essentially, the Syuzhet package produces graphs of how frequently positive and negative words occur throughout a text more than it produces graphs of the text’s emotional valence.

3. Foundation Shapes

The final step of Syuzhet is to turn the emotional trajectory into a Foundation Shape–a simplified graph of the story’s emotional valence that (hopefully) echoes the shape of the plot. But once again, I found some problems. Syuzhet produces the Foundation Shape by putting the emotional trajectory through an ideal low-pass filter, which is designed to eliminate the noise of the trajectory and smooth out its extremes. Ideal low-pass filters work by approximating the function with a fixed number of sinusoidal waves; the smaller the number of sinusoids, the smoother the resulting graph will be.

However, ideal low-pass filters often introduce extra lobes or humps in parts of the graph that aren’t well-approximated by sinusoids. These extra lobes are called ringing artifacts, and will be larger when the number of sinusoids is lower.

Here’s a simple example:

The graph on the left is the original signal, and the graph on the right demonstrates the ringing artifacts caused by a low-pass filter (specifically, by zeroing all but the first five terms of the Fourier transform). The original signal just has one lobe in the middle, but the low-pass filter introduces extra lobes on either side.

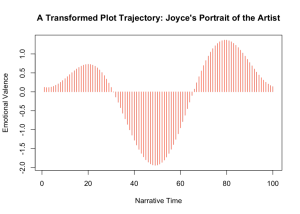
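The effect in the figure can be reproduced numerically. This sketch assumes that an ideal low-pass filter means keeping the first k coefficients of NumPy’s real FFT (`numpy.fft.rfft`) and zeroing the rest; it follows the description above rather than Syuzhet’s own code, which may differ in details such as padding.

```python
import numpy as np

def ideal_low_pass(values, keep):
    """Zero every Fourier coefficient except the first `keep`, then invert.

    rfft returns the DC term followed by increasing positive frequencies,
    so keeping the first `keep` entries keeps only the smoothest sinusoids.
    """
    coeffs = np.fft.rfft(values)
    coeffs[keep:] = 0
    return np.fft.irfft(coeffs, n=len(values))

# A flat signal with one positive lobe in the middle.
signal = np.zeros(200)
signal[80:120] = 1.0

five_terms = ideal_low_pass(signal, keep=5)   # the cutoff used in the figure
three_terms = ideal_low_pass(signal, keep=3)  # an even lower cutoff

print(f"original min:   {signal.min():.3f}")
print(f"five-term min:  {five_terms.min():.3f}")
print(f"three-term min: {three_terms.min():.3f}")
```

The original signal never drops below zero, but both filtered versions dip below zero on either side of the lobe, and the three-term version dips further than the five-term one. Because the FFT treats the signal as periodic, the two ends of a trajectory also influence each other.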

By default, Syuzhet uses an even lower cutoff than the example above (keeping only three Fourier terms). Consequently, we should expect to find inaccurate lobes in the resulting foundation shapes. The Portrait of the Artist foundation shape that Jockers presented in his post “Revealing Sentiment and Plot Arcs with the Syuzhet Package” already shows this: [5]

The full trajectory opens with a largely flat stretch and a strong negative spike around x=1100, which returns to neutral by about x=1500. The foundation shape, on the other hand, opens with a rise, and in fact peaks in positivity right around where the original signal peaks in negativity. In other words, the foundation shape for the first part of the book is not merely inaccurate but the exact opposite of the original graph.

This is a pretty serious problem, and it means that until Syuzhet provides filters that don’t cause ringing artifacts, most foundation shapes are likely to be inaccurate representations of the stories’ true plot trajectories. Since the foundation shape may in places be the opposite of the emotional trajectory, two novels with opposing emotional valences may produce foundation shapes that look identical. The “six/seven plot archetypes” of literature that Jockers claims to have derived from a sample of “41,383 novels” may therefore reflect ringing artifacts more than any actual similarity between the emotional structures of the analyzed novels.

While Syuzhet is a very interesting idea, its implementation suffers from a number of problems, including an unreliable sentence splitter, a sentiment analysis engine incapable of evaluating many sentences, and a foundation shape algorithm that fundamentally distorts the original data. Some of these problems may be fixable–there are certainly smoothing filters that don’t suffer from ringing artifacts[6]–and while I don’t know what the current state of the art in sentence detection is, I imagine algorithms exist that understand quotation marks. The failures of sentiment analysis, though, suggest that Syuzhet’s goals may not be realizable with existing tools. Until the foundation shapes and the problems with the implementation of sentiment analysis are addressed, the Syuzhet package cannot accomplish what it claims to do. I’m looking forward to seeing how these problems are addressed in future versions of the package.
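Footnote 6 mentions Gaussian filters as one smoothing option without ringing. A minimal sketch using plain NumPy convolution, where the function names, the three-sigma truncation, and sigma=10 are my own arbitrary choices: because every Gaussian weight is positive and the weights sum to 1, smoothing a signal that stays between 0 and 1 cannot push it below 0 or above 1, so no extra lobes appear.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalized Gaussian weights out to three standard deviations."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    weights = np.exp(-0.5 * (x / sigma) ** 2)
    return weights / weights.sum()

def gaussian_smooth(values, sigma):
    # mode="same" keeps the output length; it pads with zeros at the edges,
    # which pulls the ends of the curve toward 0.
    return np.convolve(values, gaussian_kernel(sigma), mode="same")

# A flat signal with one positive lobe in the middle.
signal = np.zeros(200)
signal[80:120] = 1.0

smoothed = gaussian_smooth(signal, sigma=10.0)
print(f"min {smoothed.min():.3f}, max {smoothed.max():.3f}")
```

The trade-off is that sharp features are blurred rather than preserved, and the edge padding distorts the first and last stretches of a trajectory.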

Special Thanks:

I’d like to thank the following people who have consulted with me on sentiment analysis and signal processing and read versions of this blog post.

Daniel Lepage, Senior Software Engineer, Maternity Neighborhood

Rafael Frongillo, Postdoctoral Fellow, Center for Research on Computation and Society, Harvard University

Brian Gawalt, Senior Data Scientist, Elance-oDesk

Sarah Gontarek

[1] The excerpt doesn’t include quotation marks at the beginning and end because both the opening and closing sentences are part of larger passages of dialogue.

[2] This problem was not visible with the sample dataset of Portrait of the Artist, because the Project Gutenberg text uses dashes instead of quotation marks.

[3] This example also shows another problem: longer sentences may be given greater positivity or negativity than their contents warrant, merely because they contain a greater number of positive or negative words. For instance, “I am extremely happy!” would have a lower positivity ranking than “Well, I’m not really happy; today, I spilled my delicious, glorious coffee on my favorite shirt and it will never be clean again.”

[4] The Stanford algorithm is much more robust: it has more granularity in its categories of emotion and does consider negation. However, it also fails on the sample paragraph above, and it produced multiple “Not a Number” values when we ran it on Bleak House, rendering it unusable.

[5] Other scholars have also been noticing similar problems, as Jonathan Goodwin’s results demonstrate: (https://twitter.com/joncgoodwin/status/563734388484354048/photo/1).

[6] For example, Gaussian filters do not introduce ringing artifacts, though they have their own limitations (http://homepages.inf.ed.ac.uk/rbf/HIPR2/freqfilt.htm).

Hi Annie,

Good stuff here, thanks. A couple of things that you and your readers might wish to consider. . .

1. The problem you note with sentence detection has more to do with the current state of the art in sentence parsing than with the syuzhet package. The package implements the openNLP parser for sentence boundary detection. If you use the “stanford” method, you get the Stanford parser, but it isn’t hugely better. Generally these parsers are good enough. Will there be some misses? Yes, you bet. But over a long novel these misses are not all that important, especially so when you consider that the goal here is not to capture perfect sentence-level sentiment, but rather to capture the general chronological flow of sentiment over the course of a book. If you read the footnote on my last post, you’ll see where I identify what I think is a far more serious problem than the missing of a sentence boundary or two. (In some prior experiments, btw, I used 1,000-word segments instead of sentences and that work produced very similar results. The important thing is whether or not the generalized shapes–percentage-based or transformed–match what we know, as readers, about the emotional trajectories in the books. Might be worth another look at my footnote #1.)

2. The problem you note with sentiment detection accuracy has more to do with the current state of the art in sentiment analysis than with the syuzhet package. I have implemented four of the best approaches (of those that are open source, i.e. not LIWC) from the existing literature on the subject. The Stanford sentiment parser currently holds the distinction of being the best, but only by a small margin. Current benchmark studies suggest that accuracy for all of these is in the 70-80% range and that it depends on genre; it’s not an easy problem. As far as negation, irony, and such things, I do have a note about that in my post as well. The Stanford parser handles these better than the others, but still not perfectly. Since most books are not sustained irony or negation, all of the methods tend to work well in most cases (again, footnote #1).

3. Your point about the filtering and possible ringing effects, that we already discussed over email, is most interesting, and I’ll be exploring that and working on implementing some other filtering options in the future. In the meantime, you are very welcome to fork the code on github and suggest some improvements!

Many Thanks,

Matt


[…] March 2: Annie Swafford offers an interesting critique of this work on her blog. Her post includes some comments from me […]


Hi Matt,

Great to hear from you, and thanks for the detailed response!

1. I agree that sometimes it’s not a problem if the sentence boundaries are off. However, since the openNLP parser is particularly bad at dialogue, it’s important to note that results may be skewed for novels or short stories (and this shouldn’t be surprising because the parser was trained on tweets and product reviews that are unlikely to have quotation marks). Given that the package works by adding up the positive and negative words in a sentence, it’s likely that these incorrectly long sentences could produce large positive or negative spikes that could affect the foundation shape. Also, your get_sentences function doesn’t use the Stanford parser: only get_sentiment has that option, and it requires that the input is already split into sentences, according to your documentation on GitHub.

2. I was pleased when I first read your most recent blog post that you’d addressed some of the potential errors of sentiment analysis in your first footnote. However, I’m suggesting that some texts use irony and dark humor for more extended periods than you suggest in that footnote—an assumption that can be tested by comparing human-annotated texts with the Syuzhet package.

Regardless, the bigger problem is that sentiment analysis as it is implemented in the Syuzhet package does not correctly identify the sentiment of sentences. Sentiment analysis based solely on word-by-word lexicon lookups is really not state-of-the-art at all: even the lexicons themselves make it clear that they shouldn’t be used this way. AFINN, for example, has specific entries for bigrams like “don’t like” or “not good”, but your implementation of it still evaluates one word at a time, so it cannot take advantage of those features.
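To make the bigram point concrete, here is a sketch comparing a one-word-at-a-time lookup with one that tries two-word entries first. The lexicon and its scores are hypothetical; the two-word keys only mirror the kind of entries mentioned above, and neither function is taken from Syuzhet or AFINN.

```python
import re

# Hypothetical AFINN-style lexicon with a few two-word entries.
TOY_LEXICON = {"like": 2, "good": 3, "don't like": -2, "not good": -2}

def tokens(sentence):
    return re.findall(r"[a-z']+", sentence.lower())

def unigram_valence(sentence):
    """One word at a time: two-word entries can never match."""
    return sum(TOY_LEXICON.get(w, 0) for w in tokens(sentence))

def bigram_first_valence(sentence):
    """Try a two-word entry at each position before falling back to one word."""
    words, total, i = tokens(sentence), 0, 0
    while i < len(words):
        pair = " ".join(words[i:i + 2])
        if i + 1 < len(words) and pair in TOY_LEXICON:
            total += TOY_LEXICON[pair]
            i += 2  # both words consumed by the bigram
        else:
            total += TOY_LEXICON.get(words[i], 0)
            i += 1
    return total

for s in ["I don't like it", "This is not good"]:
    print(s, unigram_valence(s), bigram_first_valence(s))
```

The unigram lookup scores both sentences as positive; the bigram-first lookup scores both as negative.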

I would expect the Stanford method to provide better results overall, but when I tried to generate a foundation shape using it I got a vector of nothing but NA, so I didn’t pursue that any further; getting the Stanford package to work consistently would go a long way towards addressing some of these issues.

Thanks again for the response, and I look forward to seeing how these problems are addressed in future versions.


[…] Source: Problems with the Syuzhet Package | Anglophile in Academia: Annie Swafford’s Blog […]


[…] Annie Swafford has raised a couple of interesting points about how the syuzhet package works to estimate the emotional trajectory in a novel, a trajectory which I have suggested serves as a handy proxy for plot (in the spirit of Kurt Vonnegut). […]


What an important and valuable contribution to ongoing conversations about text analysis and literary studies–not just because of its thoughtful, specific, and generous reading of the Syuzhet Package for R, but also because of the way in which you’ve gone about writing it up. Your responses and careful close readings of the algorithms and methodologies help move conversations around computational criticism beyond the complaint that it’s merely a matter of parlor tricks–smoke and mirrors with numbers–by treating the package with respect while exploring where it breaks down.

I would be interested to hear more about your thoughts on the way sentence-level errors in detecting sentiment present problems in the aggregate. Have you had time to reflect further on how these errors might build on themselves to continue to cause concern at scale? This seems to me to be a pretty common fallback response in text analysis. Often, comments reflect an attitude of “Oh, well, it all comes out in the wash at scale…” But does it always? I think that your lines of inquiry are an important part of moving beyond that unsatisfying fallback. In my own experience working with corpora of poetry and LDA, I feel like sentence-level discrepancies are not so easily dismissed at scale. That, in turn, has led me to wonder if we may be too quick to accept the same in prose.

I will add that saying the problems with the package are not dissimilar to the problems with sentiment analysis at large is a bit like saying that the problem with sauce is that we made it with rotten tomatoes: It’s perfectly fine sauce…and whether or not the ingredients were good in the first place is none of our concern. Of course, I wouldn’t characterize sentiment analysis as “rotten” at all, but problematic and unsettled is a given. Then again, it is the lifeblood of our work in literary studies to contend with the challenges language presents to the rigid assumptions of algorithmic logic, is it not? So this seems to me an opportunity to embrace “problems” with the Syuzhet Package as a feature rather than a flaw unless, of course, we hope somehow to “solve” literature… which would take a rather dim view of the potential for “algorithmic criticism”– Stephen Ramsay’s phrase which I’m quite fond of particularly because it revels in uncertainties rather than looking to computation to settle them.

For fear of going on and on here, I’d just like to say that I’d be particularly interested to hear more about what you’ve discovered, and I hope that there’s another blog post to come! Thanks for such a thoughtful reading, and I look forward to hearing more.


Annie: I was thrilled to see your blog posting because I have serious qualms about sentiment analysis. They began when I was working with a computer science student whose native language is not English. He ran a poem through a sentiment analysis program, assigning red to negative and blue to positive emotions, analyzing the poem by word. I can’t seem to paste the resulting picture into this reply, but each word was assigned a definite value. I was horrified. Why? The poem was “Jabberwocky.” How could value be assigned to nonsense words that are not even in the dictionary? I’m not certain which sentiment analysis tool he used, but my sense from the experiment is that these programs can be very, very misleading. Your posting here is so powerful because the novels we care about most certainly would not use straight declarations of sentiment–anything by Henry James would be impossible to analyze in this way, let alone postmodern irony, the noir atmosphere of cyberpunk, etc.


[…] engagement with dramatic arcs in this post, but they are interesting reads nonetheless. (Followup: Annie Swafford’s blog post on Jockers’ analyses are worth a read as […]


[…] archetypal plot shapes” (also known as foundation shapes) in any novel. Earlier this week, I posted a response, documenting some potential issues with the package, including problems with the sentence splitter, […]


[…] how computers aren’t taking over the world): Plot/Sentiment Analysis with Syuzhet (Jockers); response by Annie […]


[…] in novels using sentiment analysis. A lot of buzz especially has revolved around Matt Jockers and Annie Swafford, and the discussion has bled into larger academia and inspired 246 (and counting) comments on […]


[…] lot of discussion around Matt Jockers’ Syuzhet package (involving Annie Swafford, Ted Underwood, Andrew Piper, Scott Weingart and many others) has focused on issues of validity […]


[…] me. This is the foundation shape plot I got by applying the tool on the first book. Prompted by Annie Swafford’s observation on the problems of this analysis I decided to compare the plots obtained with different values of […]


[…] involves using a Fourier transform and low-pass filter, is appropriate. Annie Swafford has produced several compelling arguments that this strategy doesn’t work. And Ted Underwood has responded with […]


[…] one of the most fascinating controversies of recent years in DH, as DH scholar Annie Swafford raised questions about Matthew Jockers’s tool Syuzhet. Jockers had set out to build on Kurt Vonnegut’s […]


[…] plot shapes using sentiment analysis. After Jockers first announced Syuzhet, Annie Swafford wrote a pointed critique of the method, and over the course of roughly one month the two literary scholars debated the validity of the […]


[…] that the filter was artificially distorting the edges of the plots, and prior to Ben’s post, Annie Swafford had argued that the method was producing an unacceptable “ringing” artifact. Within […]


[…] Problem/Limitations of syuzhet:- https://annieswafford.wordpress.com/2015/03/02/syuzhet/ […]


[…] work on her own tweets. For further reading and a more critical view I would also suggest Annie Swafford‘s […]


[…] Swafford, Joanna. “Problems with the Syuzhet Package.” Anglophile in Academia: Annie Swafford’s Blog, March 2, 2015. See: https://annieswafford.wordpress.com/2015/03/02/syuzhet/ […]


[…] Annie Swafford, “Problems with the Syuzhet Package,” Anglophile in Academia: Annie Swaf… […]
